Google Reveals Technical Specs and Business Rationale for TPU Processor

- Published: Thursday, 06 April 2017 16:27

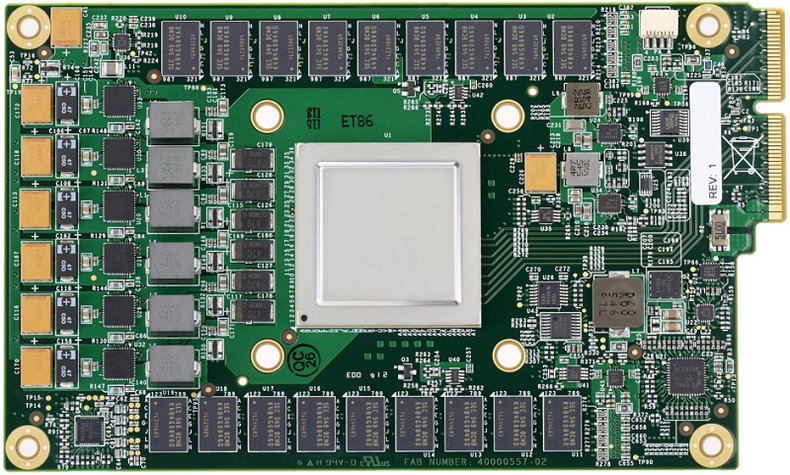

Photo: google.com

Although Google’s Tensor Processing Unit (TPU) has been powering the company’s vast empire of deep learning products since 2015, very little was known about the custom-built processor until now. This week the web giant published a description of the chip and explained why it’s an order of magnitude faster and more energy-efficient than the CPUs and GPUs it replaces.

First, a little context. The TPU is a specialized ASIC developed by Google engineers to accelerate inferencing of neural networks. That is, it speeds up the production phase of these applications, in which networks that have already been trained are put to work. So when a user initiates a voice search, asks to translate text, or looks for an image match, that’s when inferencing goes to work. For the training phase, Google, like practically everyone else in the deep learning business, uses GPUs.

The distinction is important because inferencing can do a lot of its processing with 8-bit integer operations, while training is typically performed with 32-bit or 16-bit floating point operations. As Google pointed out in its TPU analysis, multiplying 8-bit integers can use six times less energy than multiplying 16-bit floating point numbers, and for addition, thirteen times less.
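To make the 8-bit point concrete, here is a minimal sketch of how a floating point dot product can be approximated with 8-bit integer arithmetic. It assumes a simple symmetric quantization scheme, which is an illustrative choice and not necessarily what Google uses; the weights, inputs, and helper names (`scale_for`, `quantize`) are hypothetical.

```python
def scale_for(values):
    """Pick a scale so the largest magnitude maps to 127 (symmetric scheme, an assumption)."""
    return max(abs(v) for v in values) / 127


def quantize(values, scale):
    """Map floats to signed 8-bit integers: round(v / scale), clamped to [-127, 127]."""
    return [max(-127, min(127, round(v / scale))) for v in values]


weights = [0.42, -1.30, 0.07, 0.88]     # hypothetical trained weights
activations = [1.5, 0.25, -2.0, 0.6]    # hypothetical layer inputs

w_scale = scale_for(weights)
a_scale = scale_for(activations)
w_q = quantize(weights, w_scale)
a_q = quantize(activations, a_scale)

# Integer multiply-accumulate: every multiply here takes two 8-bit operands.
acc = sum(w * a for w, a in zip(w_q, a_q))

# A single floating point rescale at the end recovers an approximate result.
approx = acc * w_scale * a_scale
exact = sum(w * a for w, a in zip(weights, activations))

print(f"floating point result: {exact:.4f}")
print(f"8-bit integer result:  {approx:.4f}")
```

The two printed values should agree closely. A trained network can usually tolerate this small rounding error at inference time, while training tends to need the extra precision of floating point.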

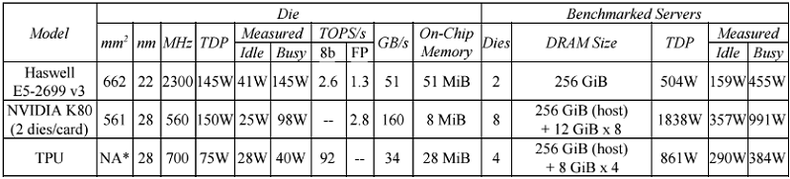

The TPU ASIC takes advantage of this by incorporating an 8-bit matrix multiply unit that can perform 64K multiply-accumulate operations in parallel. At peak output, it can deliver 92 teraops/second. The processor also has 24 MiB of on-chip memory, a relatively large amount for a chip of its size. Memory bandwidth, however, is a rather meagre 34 GB/second. To optimize energy usage, the TPU runs at a leisurely 700 MHz and draws 40 watts of power (75 watts TDP). The ASIC is manufactured on the 28nm process node.
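The peak figure can be sanity-checked from the other numbers quoted above. The sketch below assumes that "64K" means 65,536 units and that each multiply-accumulate counts as two operations (one multiply, one add); the article states neither explicitly. It also divides peak throughput by memory bandwidth to give a rough sense of why 34 GB/second is described as meagre.

```python
# Figures quoted in the article.
MAC_UNITS = 64 * 1024          # "64K" multiply-accumulate units, read as 65,536 (assumption)
CLOCK_HZ = 700e6               # 700 MHz
MEM_BANDWIDTH_BYTES = 34e9     # 34 GB/second

# Assumption: one multiply-accumulate is counted as two operations.
OPS_PER_MAC = 2

# Peak throughput if every unit completes one multiply-accumulate per clock cycle.
peak_ops = MAC_UNITS * OPS_PER_MAC * CLOCK_HZ

# Operations the chip must perform per byte fetched from memory to stay at peak.
ops_per_byte = peak_ops / MEM_BANDWIDTH_BYTES

print(f"peak: {peak_ops / 1e12:.1f} teraops/second")
print(f"operations needed per byte fetched: {ops_per_byte:.0f}")
```

Under these assumptions the peak comes to about 91.8 teraops/second, in line with the quoted 92. The ratio works out to roughly 2,700 operations per byte, so a workload that reuses each fetched byte less often than that would be limited by memory bandwidth, not by the multiply unit.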

When it comes to computer hardware, energy usage is the prime consideration for Google, since it’s tied directly to total cost of ownership (TCO) in datacenters. And for hyperscale-sized datacenters, energy costs can escalate quickly with hardware that’s too big for its intended task. Or as the authors of the TPU analysis put it: “when buying computers by the thousands, cost-performance trumps performance.”

What Google’s engineers found was that their TPU performed 15 to 30 times faster than the K80 GPU and Haswell CPU across the benchmarks in their analysis. Performance/watt was even more impressive, with the TPU beating its competition by a factor of 30 to 80. Google projects that if it used the higher-bandwidth GDDR5 memory in the TPU, it could triple the chip’s performance.